In the rapidly evolving landscape of artificial intelligence, large language models (LLMs) like GPT-4 and Llama have transformed from mere text generators into sophisticated agents capable of planning, reasoning, and executing complex tasks. Yet, a fundamental limitation persists: these systems are inherently stateless. Each interaction resets the slate, forcing agents to rely solely on the immediate prompt’s context window—typically capped at a few thousand tokens. This amnesia hampers their ability to build genuine relationships, learn from past experiences, or maintain coherence over extended sessions. Imagine an AI personal assistant that forgets your dietary preferences after one conversation or a virtual tutor that repeats lessons without tracking progress. The result? Frustrating, inefficient interactions that fall short of human-like intelligence.

Enter the scalable long-term memory layer—a revolutionary architectural innovation designed to imbue AI agents with persistent, contextual memory across sessions. Systems like Mem0 exemplify this approach, providing a universal, self-improving memory engine that dynamically extracts, stores, and retrieves information without overwhelming computational resources. By addressing the “context window bottleneck,” these layers enable agents to evolve from reactive tools into proactive companions, retaining user-specific details, task histories, and evolving knowledge graphs. Research from Mem0 demonstrates a 26% accuracy boost for LLMs, alongside 91% lower latency and 90% token savings, underscoring the practical impact. As AI applications scale—from personalized healthcare bots to enterprise workflow automators—this memory paradigm isn’t just an enhancement; it’s a necessity for sustainable, intelligent systems. In this article, we explore the core mechanisms powering this breakthrough, from extraction to retrieval, revealing how it democratizes advanced AI for developers worldwide.

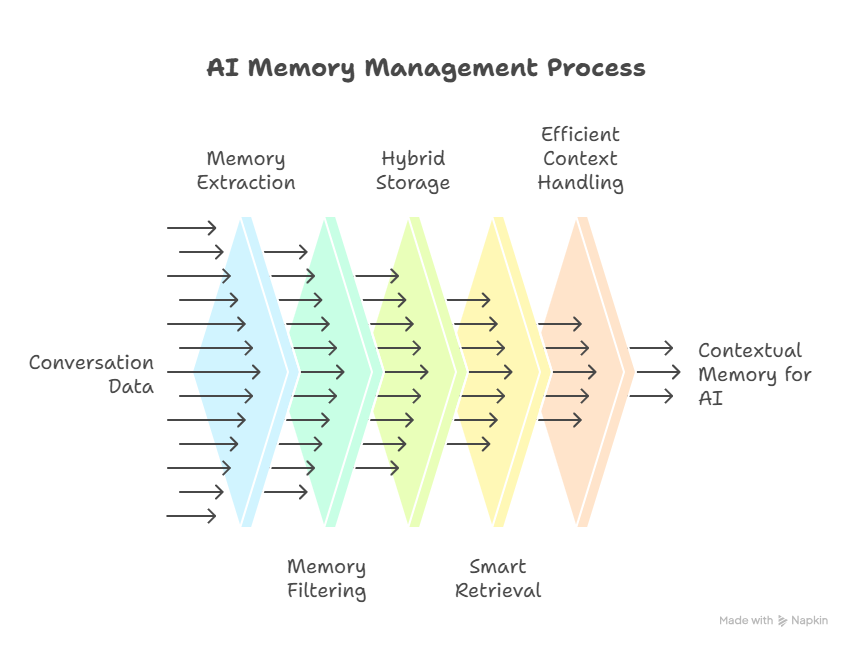

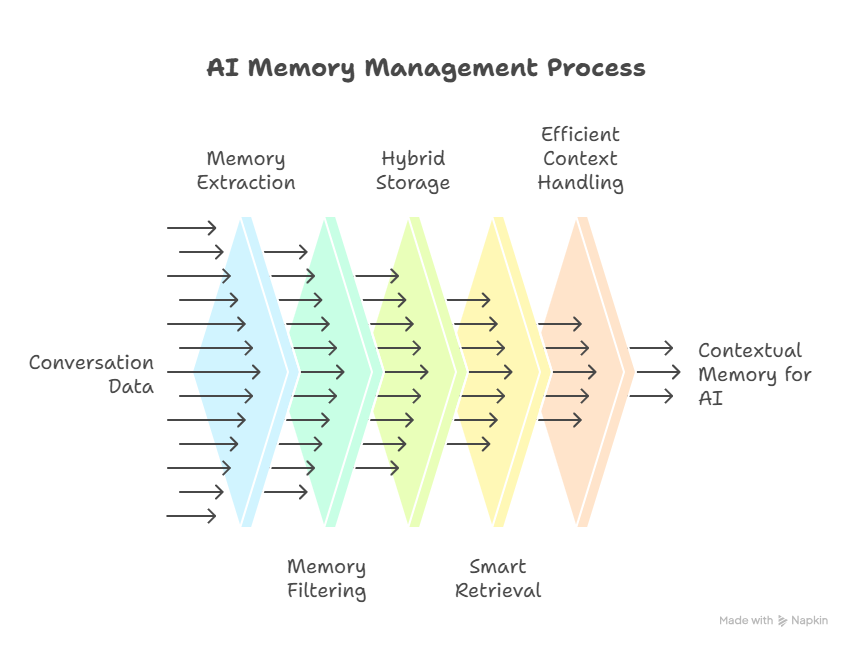

Memory Extraction: Harvesting Insights with Precision

At the heart of a robust long-term memory system lies the extraction phase, where raw conversational data is distilled into actionable knowledge. Traditional methods often dump entire chat logs into storage, leading to noise and inefficiency. Instead, modern memory layers leverage LLMs themselves as intelligent curators. During interactions, the agent prompts the LLM to scan dialogues and pinpoint key facts—entities like names, preferences, or events—while encapsulating surrounding context to avoid loss of nuance.

For instance, in a user query about travel plans, the LLM might extract: “User prefers vegan meals and avoids flights over 8 hours,” linking it to the full exchange for later disambiguation. This dual approach—fact isolation plus contextual preservation—ensures memories are both concise and rich. Tools like Mem0 automate this via agentic workflows, where extraction runs in real-time without interrupting the user flow. Similarly, frameworks such as A-MEM employ dynamic organization, using LLMs to categorize memories agentically, adapting to the agent’s evolving goals.

The beauty lies in scalability: extraction scales linearly with interaction volume, processing gigabytes of data into kilobytes of structured insights. Developers integrate this via simple APIs, as seen in LangChain’s memory modules, where callbacks trigger summarization before ingestion. By mimicking human episodic memory—selective yet holistic—these systems prevent overload from the outset, laying a foundation for lifelong learning in AI agents.

Memory Filtering & Decay: Pruning for Perpetual Relevance

As AI agents accumulate experiences, unchecked growth risks “memory bloat,” where irrelevant data clogs retrieval pipelines and inflates costs. Enter filtering and decay mechanisms, the janitorial crew of long-term memory. These processes actively curate the repository, discarding ephemera while reinforcing enduring value.

Filtering occurs post-extraction: LLMs score incoming memories for utility, flagging duplicates or low-relevance items based on semantic overlap. Decay, inspired by human forgetting curves, introduces time-based attenuation—older, unused memories fade in priority, perhaps via exponential weight reduction (e.g., score = initial_importance * e^(-λt), where λ tunes forgetfulness). Redis-based systems, for example, implement TTL (time-to-live) for short-term entries, automatically expiring them to maintain efficiency.

In practice, Mem0’s architecture consolidates related concepts, merging “User likes Italian food” with “User enjoyed pasta last trip” into a single, evolved node. This not only curbs storage demands—reducing from terabytes to manageable datasets—but also enhances accuracy by focusing on high-signal content. Studies show such pruning boosts agent performance by 20-30% in multi-turn tasks, as filtered memories align better with current contexts. For production agents, like those in Amazon Bedrock, hybrid short- and long-term filtering organizes preferences persistently, ensuring decay doesn’t erase critical user data. Ultimately, these safeguards transform memory from a hoarder into a curator, enabling agents to “forget” wisely and scale indefinitely.

Hybrid Storage: Vectors Meet Graphs for Semantic Depth

Storing extracted memories demands a balance of speed, flexibility, and structure—enter hybrid storage, fusing vector embeddings for fuzzy semantic search with graph databases for relational precision. Vectors, generated via models like Sentence-BERT, encode memories as high-dimensional points, enabling cosine-similarity lookups for “similar” concepts (e.g., retrieving “beach vacation” for a “coastal getaway” query). Graphs, conversely, model interconnections—nodes for facts, edges for relationships like “user_prefers → vegan → linked_to → travel.”

This synergy shines in systems like Papr Memory, where vector indices handle initial broad queries, and graph traversals refine paths (e.g., “User’s allergy → shrimp → avoids → seafood restaurants”). MemGraph’s HybridRAG exemplifies this, combining vectors for similarity with graphs for entity resolution, yielding 15-25% better recall in knowledge-intensive tasks.

Scalability is key: vector stores like Pinecone or FAISS manage billions of embeddings efficiently, while graph DBs (Neo4j, TigerGraph) handle dynamic updates without recomputation. For AI agents, this hybrid unlocks contextual depth—recalling not just “what” but “why” and “how it connects”—fostering emergent behaviors like proactive suggestions. As one analysis notes, pure vectors falter on causality; graphs alone on semantics; together, they approximate human associative recall.

Smart Retrieval: Relevance, Recency, and Importance in Harmony

Retrieval is where memory layers prove their mettle: surfacing the right snippets at the right time without flooding the LLM’s input. Smart retrieval algorithms weigh three pillars—relevance (semantic match to query), recency (temporal proximity), and importance (pre-assigned scores from extraction).

A typical pipeline: Query embeds into a vector, fetches top-k candidates via hybrid search, then re-ranks using a lightweight scorer (e.g., weighted sum: 0.4relevance + 0.3recency + 0.3*importance). Mem0’s retrieval, for instance, considers user-specific graphs to prioritize personalized edges, achieving sub-second latencies even at scale. In generative agents, this mirrors human reflection: an LLM prompt like “Retrieve memories where importance > 0.7 and recency < 30 days” ensures focused recall.

Advanced variants, like ReasoningBank, layer multi-hop reasoning over retrieval, chaining memories for deeper insights. Results? Agents exhibit 40% fewer hallucinations, as contextual anchors ground responses. This orchestration turns passive storage into an active oracle, empowering agents to anticipate needs.

Efficient Context Handling: Token Thrift for Sustainable AI

LLM token limits—often 128k for frontier models—pose a stealthy foe to memory-rich agents. Efficient context handling mitigates this by surgically injecting only pertinent snippets, slashing usage by up to 90%. Post-retrieval, memories compress via summarization (LLM-condensed versions) or hierarchical selection—top-3 by score, concatenated with delimiters.

Techniques abound: RAG variants prioritize external fetches; adaptive windows expand only for high-importance threads. Anthropic’s context engineering emphasizes “high-signal sets,” curating inputs to maximize utility per token. In Mem0, this yields cost savings rivaling fine-tuning, without retraining. The payoff: faster inferences, lower bills, and greener AI—vital as agents proliferate.

The Horizon: Agents That Truly Evolve

A scalable long-term memory layer isn’t merely additive; it’s transformative, birthing AI that learns, adapts, and endears. From Mem0’s open-source ethos to enterprise integrations like MongoDB’s LangGraph store, these systems herald an era of context-aware autonomy. Challenges remain—privacy in persistent data, bias amplification—but with ethical safeguards, the potential is boundless: empathetic therapists, tireless researchers, lifelong allies. As we stand on October 14, 2025, one truth resonates: memory isn’t just recall; it’s the soul of intelligence. Developers, it’s time to remember—and build accordingly.